LeanIX VSM Integration (pathfinder-based, deprecated)

Introduction

This documentation is deprecated

Please refer to the updated version of the VSM Discovery integration.

The LeanIX VSM integration offers an automatic way to integrate discovered software artifacts from VSM into your LeanIX Enterprise Architecture Management (EAM) workspace. This will allow you to:

- Use aggregated information on your discovered software artifacts to drive decision making within EAM

- Provide a seamless end-to-end view in EAM on your IT Landscape to support your business transformation use cases

Setup

The workspace-to-workspace connector works with Integration Hub to scan the source workspace and convert the result to LDIF. It then automatically triggers the transformation of the incoming LDIF data by means of an inbound Integration API processor.

This integration is set up on the EAM workspace.

Enable the connector on your workspace

Please contact your CSM to enable this connector on your workspace.

Configuration

- In your EAM workspace go to Administration > Integration Hub

- Create a new data source by clicking on New Data source

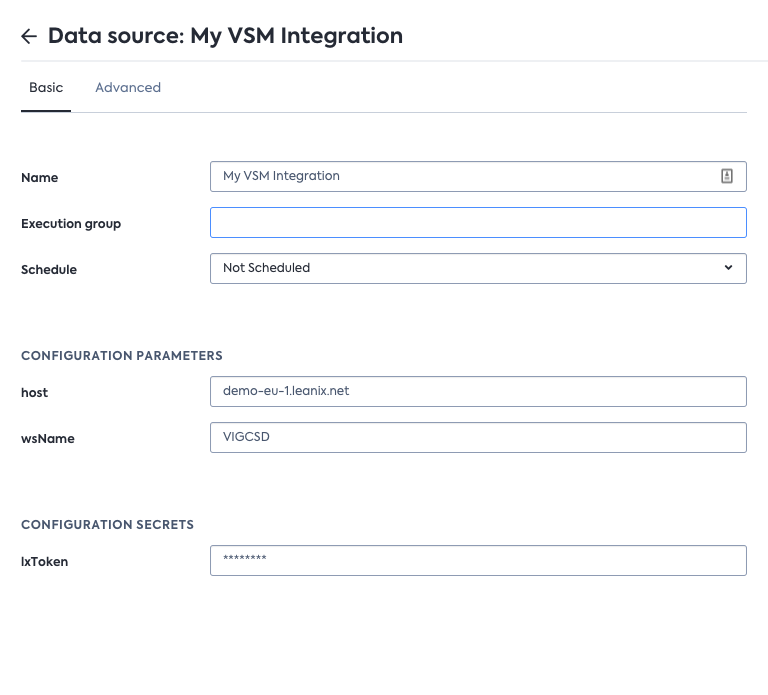

- Enter the following details:

- Connector Name: vsm-vsmSync-connector

- Data source name: any name to identify the data source

- Enter the following attributes under the Configuration Parameters:

- host: your hostname e.g. demo-eu-1.leanix.net

- wsName: the VSM workspace name

- fetchSoftwareArtifactsOfCategories: List of software artifact categories to be synced to EAM (this is an optional attribute)

- Enter the following attributes under the Configuration Secrets:

- lxToken: API token of a Technical User in the VSM workspace. The Technical User needs Admin rights for this integration to work.

Your setup should look similar to:

Sample setup of the data source

- To test whether the integration is set up correctly, click on Test connector.
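Before entering the values in the form, the parameters and secrets listed above can be sanity-checked. The following is a minimal Python sketch of such a check. The helper name `check_data_source_settings` and the dictionary layout are hypothetical and not part of any LeanIX API; only the parameter names (host, wsName, fetchSoftwareArtifactsOfCategories, lxToken) come from this page.

```python
# Names taken from this page; everything else in this sketch is illustrative.
REQUIRED_PARAMETERS = ("host", "wsName")
REQUIRED_SECRETS = ("lxToken",)


def check_data_source_settings(parameters, secrets):
    """Return a list of problems found in the settings (empty if none)."""
    problems = []
    for name in REQUIRED_PARAMETERS:
        if not parameters.get(name):
            problems.append(f"missing configuration parameter: {name}")
    for name in REQUIRED_SECRETS:
        if not secrets.get(name):
            problems.append(f"missing configuration secret: {name}")
    # The page shows a bare hostname (demo-eu-1.leanix.net), not a URL.
    if "/" in parameters.get("host", ""):
        problems.append(
            "host should be a bare hostname such as demo-eu-1.leanix.net"
        )
    return problems


if __name__ == "__main__":
    parameters = {
        "host": "demo-eu-1.leanix.net",
        "wsName": "my-vsm-workspace",  # placeholder workspace name
        # Optional; omit to use the connector's default behaviour.
        "fetchSoftwareArtifactsOfCategories": ["Microservice"],
    }
    secrets = {"lxToken": "<API token of the Technical User>"}
    print(check_data_source_settings(parameters, secrets))
```

The check only catches missing values and an obviously malformed host. Whether the token is valid and has Admin rights is still verified by Test connector.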

Scheduling your integration

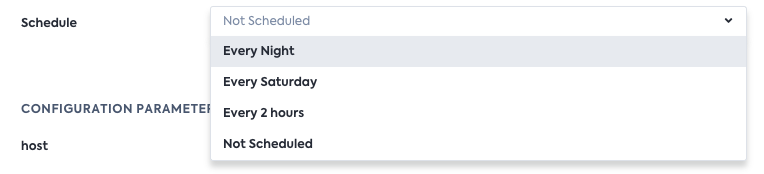

To run the integration on a schedule, specify the schedule via the schedule parameter in the data source config.

The recommendation is to run this integration once a day.

Scheduling options

Imported data

We suggest running the connector on a daily schedule, but the schedule can be adjusted to individual needs. The table below lists the information that is synchronized across the workspaces:

| VSM | EAM |
|---|---|
| Any Software Artifact Fact Sheet (including subcategories) | IT Component Fact Sheet of category Software Artifact |
| Relation between Product Fact Sheet and Software Artifact Fact Sheet | Used to map relations between the respective Application Fact Sheet and the respective IT Component Fact Sheet |
| Category of Software Artifact Fact Sheet, e.g. Microservice | Single-select attribute (label: Software Artifact Type) on IT Component Fact Sheet of category Software Artifact |
| Fact Sheet URL | Backlink to the VSM Fact Sheet under Resources |
| Tag VSM on Application & IT Component Fact Sheet of category Software Artifact Type | |
| Product Fact Sheet | Application Fact Sheet (if the createApplicationsFromVSMOnlyProducts flag is set to true) |

The table below lists the information that is removed from the EAM workspace when its counterpart in VSM is deleted:

| VSM | EAM |
|---|---|
| Any Software Artifact Fact Sheet (including subcategories) is archived | Backlink URL on the corresponding IT Component Fact Sheet of category Software Artifact is deleted |
| Relation between Product Fact Sheet and Software Artifact Fact Sheet is deleted | Relation between the respective Application Fact Sheet and the respective IT Component Fact Sheet is deleted |
| Product Fact Sheet is archived | Backlink URL on the corresponding Application Fact Sheet is deleted |

Leveraging the full power of the two integrations (EAM & VSM)

Setting this integration up along with the EAM integration (in VSM) will allow the system to automatically link your applications in EAM to their discovered software artifacts (brought in by the VSM integration as IT Components). Hence, this will allow for an automatic link between applications and IT components, subtype Software Artifact in EAM.

Requirement: you need to link Products in VSM to their respective Software Artifacts in VSM

Securing Data Reliance

Within the integration(s) between workspaces, a leading system is defined for each Fact Sheet type and even for each individual attribute. This ensures that data between workspaces is always in sync and does not need to be manually tracked or followed up. To take this further and make the source of the data clearer for end users, you can define the synchronized attributes as "read only" in LeanIX Self-Config in the respective workspace. This avoids user confusion, as edits can then only be made in the source workspace.

Including Public Cloud Data in the integration

To include Public Cloud Data discovered in your VSM workspace, please follow these instructions

Extending the Integration

You have the ability to extend (i.e. add more data to the integration) self-sufficiently. This is powered by the Integration API capability called execution groups, which will add your custom logic (custom processors with said execution group) to run as part of this integration.

The below steps show one example of how to go about this.

Extend exported LDIF from source VSM Workspace

Currently, the VSM integration exports Software Artifact Fact Sheets from a VSM workspace. If your organization is interested in exporting other types of Fact Sheets (e.g. Deployment or API Fact Sheets) and mapping them into EAM, this can be achieved by using execution groups. This customization is explained in detail in the example below.

Important Notice

When creating custom processors, note that all added custom Integration API processors must have "processingDirection": "inbound" and "processingMode": "full".
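The requirement above can be checked mechanically before a run. The following Python sketch assumes that each processor configuration is available as a dictionary with top-level processingDirection and processingMode keys. The samples on this page do not show those keys, so this layout is an assumption, and the function name `find_setting_violations` is hypothetical.

```python
# Required values as stated in the notice above.
REQUIRED_SETTINGS = {"processingDirection": "inbound", "processingMode": "full"}


def find_setting_violations(configs):
    """Map each configuration name to the settings that differ from the required values."""
    violations = {}
    for name, config in configs.items():
        wrong = [
            f"{key} should be {expected!r}, found {config.get(key)!r}"
            for key, expected in REQUIRED_SETTINGS.items()
            if config.get(key) != expected
        ]
        if wrong:
            violations[name] = wrong
    return violations


if __name__ == "__main__":
    configs = {
        "exportDeployment": {"processingDirection": "inbound", "processingMode": "full"},
        "legacyExport": {"processingDirection": "outbound", "processingMode": "full"},
    }
    for name, issues in find_setting_violations(configs).items():
        print(name, issues)
```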

Examples

Example 1: Export Deployment Fact Sheets from VSM Workspace to Process Fact Sheets in EAM Workspace

Step 1

Create an inbound Integration API processor on the VSM workspace that reads Deployment Fact Sheets and writes them to an LDIF. The processorType must be set to writeToLdif (see the example below); no other processor type is allowed. The sample processor below exports the deploymentId, name, and description of Deployment Fact Sheets. Other fields can be included if required.

    {
      "processors": [
        {
          "processorType": "writeToLdif",
          "processorName": "exportDeployment",
          "processorDescription": "exportDeployment",
          "filter": {
            "advanced": "${integration.contentIndex==0}"
          },
          "identifier": {
            "search": {
              "scope": {
                "ids": [],
                "facetFilters": [
                  {
                    "keys": [
                      "Deployment"
                    ],
                    "facetKey": "FactSheetTypes",
                    "operator": "AND"
                  }
                ]
              },
              "filter": "${lx.factsheet.type == 'Deployment'}",
              "multipleMatchesAllowed": true
            }
          },
          "updates": [
            {
              "key": {
                "expr": "content.id"
              },
              "mode": "selectFirst",
              "values": [
                {
                  "expr": "${lx.factsheet.deploymentId}"
                }
              ]
            },
            {
              "key": {
                "expr": "name"
              },
              "mode": "selectFirst",
              "values": [
                {
                  "expr": "${lx.factsheet.displayName}"
                }
              ]
            },
            {
              "key": {
                "expr": "description"
              },
              "mode": "selectFirst",
              "values": [
                {
                  "expr": "${lx.factsheet.description}"
                }
              ]
            },
            {
              "key": {
                "expr": "content.type"
              },
              "mode": "selectFirst",
              "values": [
                {
                  "expr": "${lx.factsheet.type}"
                }
              ]
            },
            {
              "key": {
                "expr": "sourceWorkspaceBacklinkBaseUrl"
              },
              "mode": "selectFirst",
              "values": [
                {
                  "expr": "${header.customFields.sourceWorkspaceBacklinkBaseUrl}"
                }
              ]
            }
          ],
          "enabled": true,
          "read": {
            "fields": [
              "deploymentId",
              "displayName",
              "description"
            ],
            "relations": {
              "filter": [],
              "targetFields": []
            }
          }
        }
      ],
      "variables": {},
      "executionGroups": [
        "leanix-ws2ws-vsm-export-inbound"
      ],
      "targetLdif": {
        "dataConsumer": {
          "type": "leanixStorage"
        },
        "ldifKeys": [
          {
            "key": "connectorType",
            "value": "leanix-vsm-connector"
          },
          {
            "key": "connectorId",
            "value": "leanix-ws2ws-vsmsync-connector"
          },
          {
            "key": "processingMode",
            "value": "full"
          },
          {
            "key": "lxWorkspace",
            "value": "${header.customFields.bindingKeyLxWorkspace}"
          }
        ]
      }
    }
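To make the effect of the updates section in the sample processor more concrete, the following Python sketch builds the kind of LDIF content entry it is expected to produce for one Deployment Fact Sheet. It is an illustration only, not the Integration API implementation. It assumes that keys prefixed with content. end up on the entry itself and that all other keys end up under data, which is inferred from the way Step 2 reads content.id and data.name. The function name `to_ldif_content` and the sample values are hypothetical.

```python
def to_ldif_content(fact_sheet, backlink_base_url):
    """Build one LDIF content entry as the sample 'updates' section suggests."""
    # Key -> value pairs, mirroring the five entries of the 'updates' array.
    values = {
        "content.id": fact_sheet["deploymentId"],
        "name": fact_sheet["displayName"],
        "description": fact_sheet["description"],
        "content.type": fact_sheet["type"],
        "sourceWorkspaceBacklinkBaseUrl": backlink_base_url,
    }
    entry = {"data": {}}
    for key, value in values.items():
        if key.startswith("content."):
            # Assumption: 'content.id' and 'content.type' set the entry's id and type.
            entry[key[len("content."):]] = value
        else:
            entry["data"][key] = value
    return entry


if __name__ == "__main__":
    deployment = {
        "type": "Deployment",
        "deploymentId": "dep-1",
        "displayName": "checkout-service (prod)",
        "description": "Production deployment of the checkout service",
    }
    print(to_ldif_content(deployment, "https://demo-eu-1.leanix.net/my-vsm-workspace"))
```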

Step 2

After making sure that the above Integration API processor is saved on the VSM workspace, create a new Integration API processor in the EAM workspace to import the exported data. When creating this processor, it is important to specify the leanix-ws2ws-eam-import-inbound execution group in the executionGroups array (see the processor config below).

Below is a sample Integration API processor configuration that imports the Deployment content objects created above as "Process" Fact Sheets in the EAM workspace.

    {
      "processors": [
        {
          "processorType": "inboundFactSheet",
          "processorName": "createProcessFS",
          "processorDescription": "create Process FS from Deployment FS",
          "type": "Process",
          "filter": {
            "exactType": "Deployment"
          },
          "identifier": {
            "external": {
              "id": {
                "expr": "${content.id}"
              },
              "type": {
                "expr": "externalId"
              }
            }
          },
          "run": 0,
          "updates": [
            {
              "key": {
                "expr": "name"
              },
              "values": [
                {
                  "expr": "${data.name}"
                }
              ]
            }
          ],
          "enabled": true
        }
      ],
      "variables": {},
      "executionGroups": [
        "leanix-ws2ws-eam-import-inbound"
      ]
    }
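The mapping performed by the sample import processor can be pictured with a small Python simulation. It is a sketch under assumptions, not the Integration API itself: LDIF content entries are assumed to be dictionaries with id, type, and data keys, and the function name `plan_process_fact_sheets` is hypothetical.

```python
def plan_process_fact_sheets(ldif_content):
    """Return the Process Fact Sheets the sample import processor would target."""
    planned = []
    for entry in ldif_content:
        # "filter": {"exactType": "Deployment"} keeps only Deployment entries.
        if entry.get("type") != "Deployment":
            continue
        planned.append({
            "type": "Process",             # "type": "Process" in the processor
            "externalId": entry["id"],     # identifier: ${content.id} as externalId
            "name": entry["data"]["name"], # update: name <- ${data.name}
        })
    return planned


if __name__ == "__main__":
    content = [
        {"type": "Deployment", "id": "dep-1", "data": {"name": "checkout-service (prod)"}},
        {"type": "Microservice", "id": "ms-7", "data": {"name": "checkout-service"}},
    ]
    print(plan_process_fact_sheets(content))
```

In this simulation only the Deployment entry is turned into a Process Fact Sheet. Entries of other types are left to the processors that handle them.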

Step 3

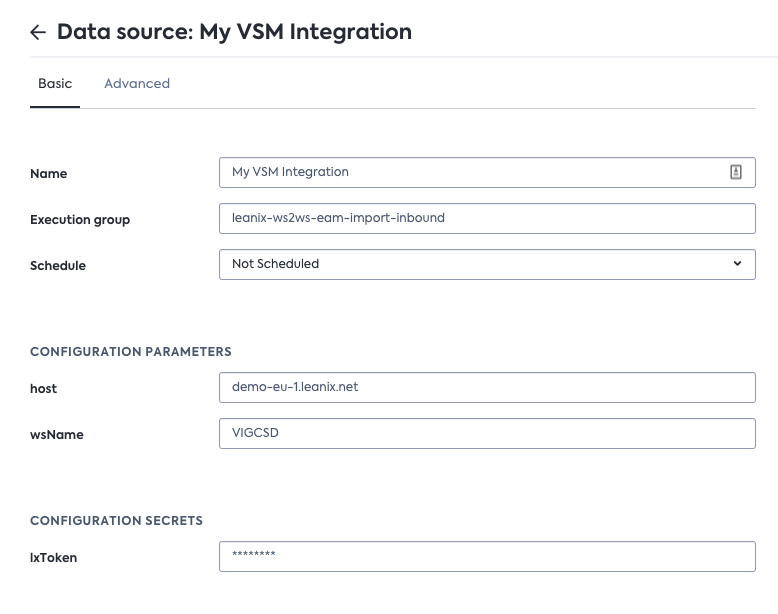

After making sure that the custom Integration API processor config for importing the data is present on the EAM workspace, either configure a new data source or change the existing data source in the EAM workspace to include the execution group (leanix-ws2ws-eam-import-inbound). This ensures that the integration run includes your custom logic.

To enable the Execution group field in the UI, first go to the Advanced tab and replace the bindingKey key with:

"executionGroup": "leanix-ws2ws-eam-import-inbound"

You can specify the execution group in the data source config UI under Administration>Integration Hub.
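The replacement of the bindingKey key described above can be sketched in Python as follows. The shape of the advanced configuration (a flat dictionary) and the sample bindingKey value are assumptions made for illustration; the function name `use_execution_group` is hypothetical.

```python
import copy


def use_execution_group(advanced_config, group="leanix-ws2ws-eam-import-inbound"):
    """Return a copy of the config with bindingKey replaced by executionGroup."""
    updated = copy.deepcopy(advanced_config)  # leave the caller's dict untouched
    updated.pop("bindingKey", None)           # drop bindingKey if it is present
    updated["executionGroup"] = group
    return updated


if __name__ == "__main__":
    # Placeholder content; the real advanced config may contain other keys.
    before = {"bindingKey": {"connectorType": "example"}}
    print(use_execution_group(before))
```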

Your configuration should look similar to below:

Full configuration of Data source
