Step 3: Evaluate AI-Related Risks

Evaluate the risks related to AI usage in applications to develop an effective risk mitigation strategy.

Evaluating the risks associated with AI technologies is an essential part of an organization’s risk strategy. As a best practice, make AI risk assessment part of your risk managers' regular work.

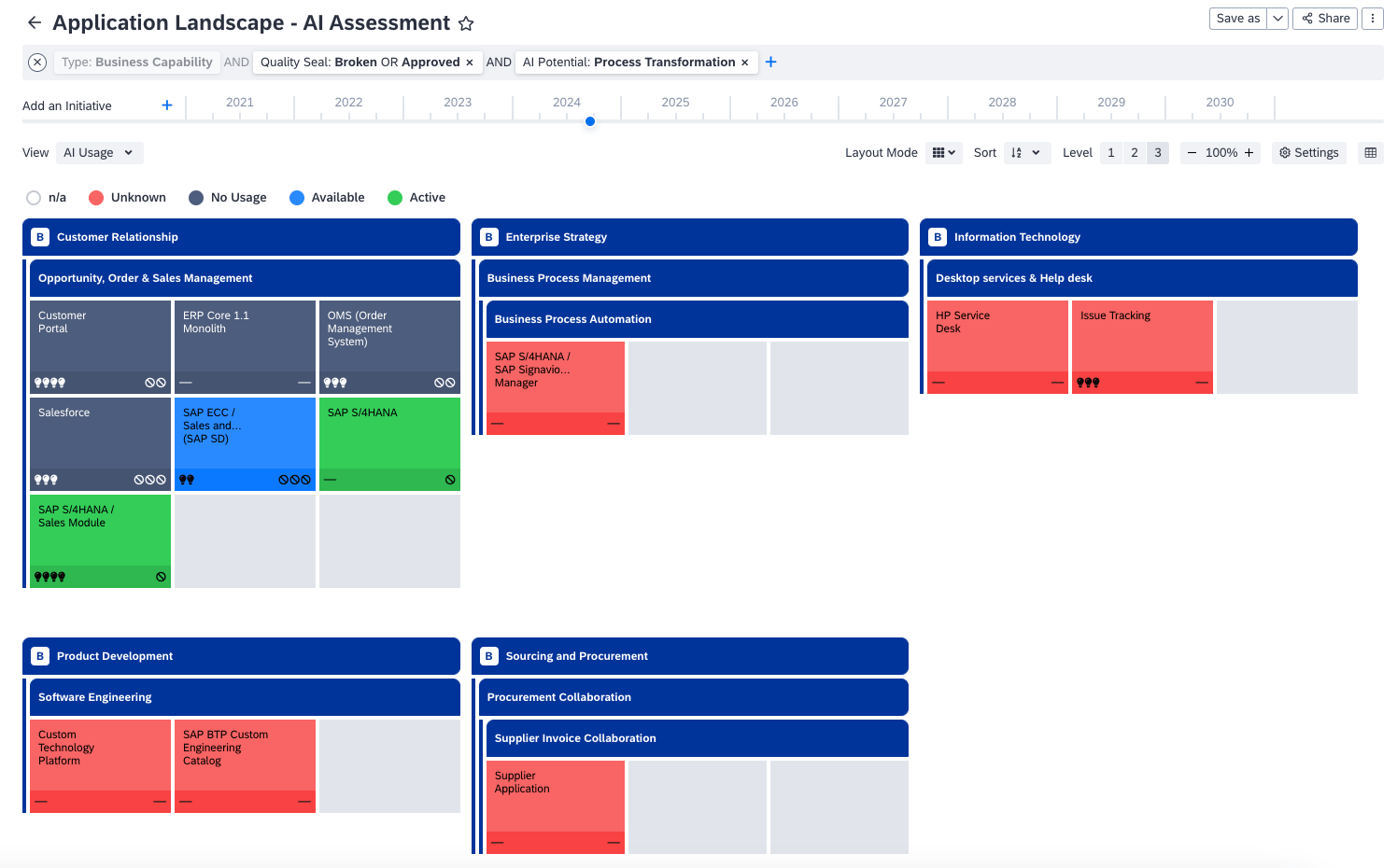

For this assessment, use the Application Landscape report with the AI Usage view applied, as in the previous step. In the report settings, under Multiple Properties, set AI Risk as an additional property (for example, as the right property).

Application Landscape Report Clustered by Business Capabilities Used for Assessing AI Risks

To evaluate AI-related risks, you can proceed as follows:

- Assess AI risk: Start by identifying applications with the highest level of AI risk. These could be applications that handle sensitive data, have complex AI models, or lack robust security measures. Prioritize these applications for further investigation and risk mitigation actions.

- Cross-reference AI risk with AI usage: Applications with high risk and active AI usage need immediate attention to mitigate risks. Applications with high risk and unknown AI usage are a significant blind spot and should be investigated promptly.

- Cross-reference AI risk with AI potential: Applications with high risk and high potential need careful management to mitigate risks while maximizing potential. Conversely, applications with high risk and low potential may not justify the risk and could be candidates for decommissioning.
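If you export the applications from the report into a list, the cross-referencing above can be sketched in a few lines of Python. This is a minimal illustration, not a SAP LeanIX feature: the `Application` record, its field names, and the level values (`"minimal"`, `"high"`, `"active"`, `"unknown"`, and so on) are hypothetical stand-ins for the AI Risk, AI Usage, and AI Potential values in your workspace.

```python
from dataclasses import dataclass

# Hypothetical ordinal scale for AI Risk; replace with the values used in your workspace.
RISK_ORDER = {"minimal": 0, "low": 1, "medium": 2, "high": 3}


@dataclass
class Application:
    name: str
    ai_risk: str       # hypothetical values: "minimal", "low", "medium", "high"
    ai_usage: str      # hypothetical values: "active", "planned", "none", "unknown"
    ai_potential: str  # hypothetical values: "low", "medium", "high"


def assess(apps):
    """Return (name, findings) pairs, ordered from highest to lowest AI risk."""
    results = []
    # sorted() is stable, so applications with equal risk keep their input order.
    for app in sorted(apps, key=lambda a: RISK_ORDER[a.ai_risk], reverse=True):
        findings = []
        if app.ai_risk == "high":
            # Cross-reference high risk with AI usage.
            if app.ai_usage == "active":
                findings.append("mitigate immediately")
            elif app.ai_usage == "unknown":
                findings.append("investigate unknown AI usage")
            # Cross-reference high risk with AI potential.
            if app.ai_potential == "high":
                findings.append("mitigate risk while maximizing potential")
            elif app.ai_potential == "low":
                findings.append("candidate for decommissioning")
        results.append((app.name, findings))
    return results


apps = [
    Application("CRM", "medium", "active", "high"),
    Application("HR Portal", "high", "unknown", "low"),
    Application("Chatbot", "high", "active", "high"),
]
for name, findings in assess(apps):
    print(name, findings)
```

Sorting by risk first mirrors the advice to start with the highest-risk applications; the findings then only flag high-risk applications, since those are the cases the cross-references address.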

By using the report, you can plan your risk management initiatives. Here are some best practices:

- Prioritize applications with high risk, active usage, and high potential: These applications should be your top priority because they can deliver significant value but also pose risks. They require robust governance policies and risk mitigation strategies.

- Address applications with high risk and unknown usage: These applications represent a significant risk and may include shadow AI technologies that need to be brought under governance. Investigate them promptly and bring them under control.

- Review applications with minimal risk, high potential, and active usage: These applications are your AI champions because they're delivering high value with minimal risk. They should be used as models for AI adoption in other parts of your organization.
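The three best practices above amount to a small decision table. The following Python sketch groups applications into those action buckets; it is a hypothetical illustration, not a product feature. The `App` record, the action labels, and the level values are assumptions, and high or active usage is represented by the hypothetical value `"active"`.

```python
from typing import NamedTuple, Optional

# Hypothetical action labels, listed in priority order.
TOP_PRIORITY = "top priority: governance and risk mitigation"
INVESTIGATE = "investigate: possible shadow AI"
CHAMPION = "AI champion: model for adoption"
PRIORITY_ORDER = [TOP_PRIORITY, INVESTIGATE, CHAMPION]


class App(NamedTuple):
    name: str
    risk: str       # hypothetical values: "minimal", "low", "medium", "high"
    usage: str      # hypothetical values: "active", "planned", "none", "unknown"
    potential: str  # hypothetical values: "low", "medium", "high"


def plan_action(app: App) -> Optional[str]:
    """Map an application to the first matching best-practice bucket, or None."""
    if app.risk == "high" and app.usage == "active" and app.potential == "high":
        return TOP_PRIORITY
    if app.risk == "high" and app.usage == "unknown":
        return INVESTIGATE
    if app.risk == "minimal" and app.usage == "active" and app.potential == "high":
        return CHAMPION
    return None


def plan(apps: list[App]) -> dict[str, list[str]]:
    """Group application names by action, keeping the buckets in priority order."""
    buckets = {label: [] for label in PRIORITY_ORDER}
    for app in apps:
        label = plan_action(app)
        if label is not None:
            buckets[label].append(app.name)
    return buckets


apps = [
    App("Chatbot", "high", "active", "high"),
    App("HR Portal", "high", "unknown", "low"),
    App("Search", "minimal", "active", "high"),
    App("Wiki", "low", "none", "low"),
]
for label, names in plan(apps).items():
    print(label, names)
```

Applications that match none of the rules, such as `Wiki` in this example, are left out of the plan; how you handle them depends on your own risk process.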

By using these strategies, you can gain valuable insights into AI risks in your organization and make informed decisions about AI governance and adoption.

Once you've assessed AI-related risks, you can identify which AI technologies currently in use don't comply with your organization’s standards. For details, see Step 4: Standardize AI Usage.
